Efficient and Accurate Candidate Generation for Grasp Pose

By a mysterious writer

Last updated 13 April 2025

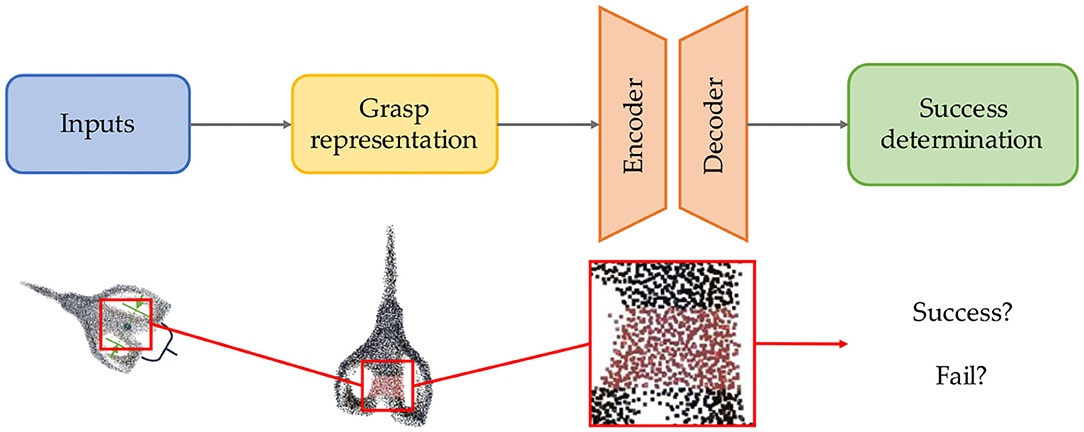

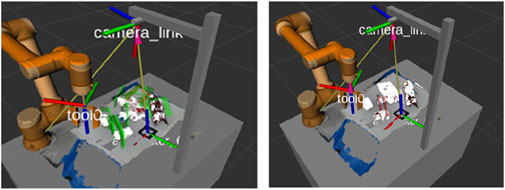

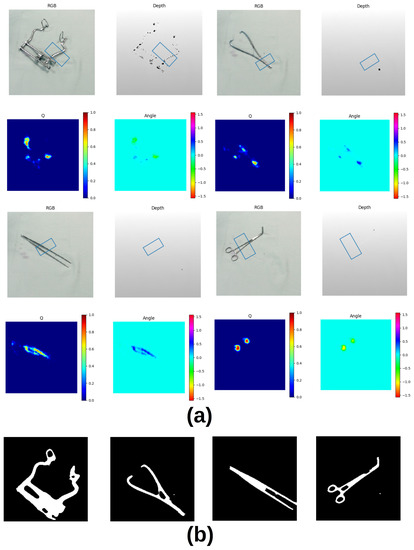

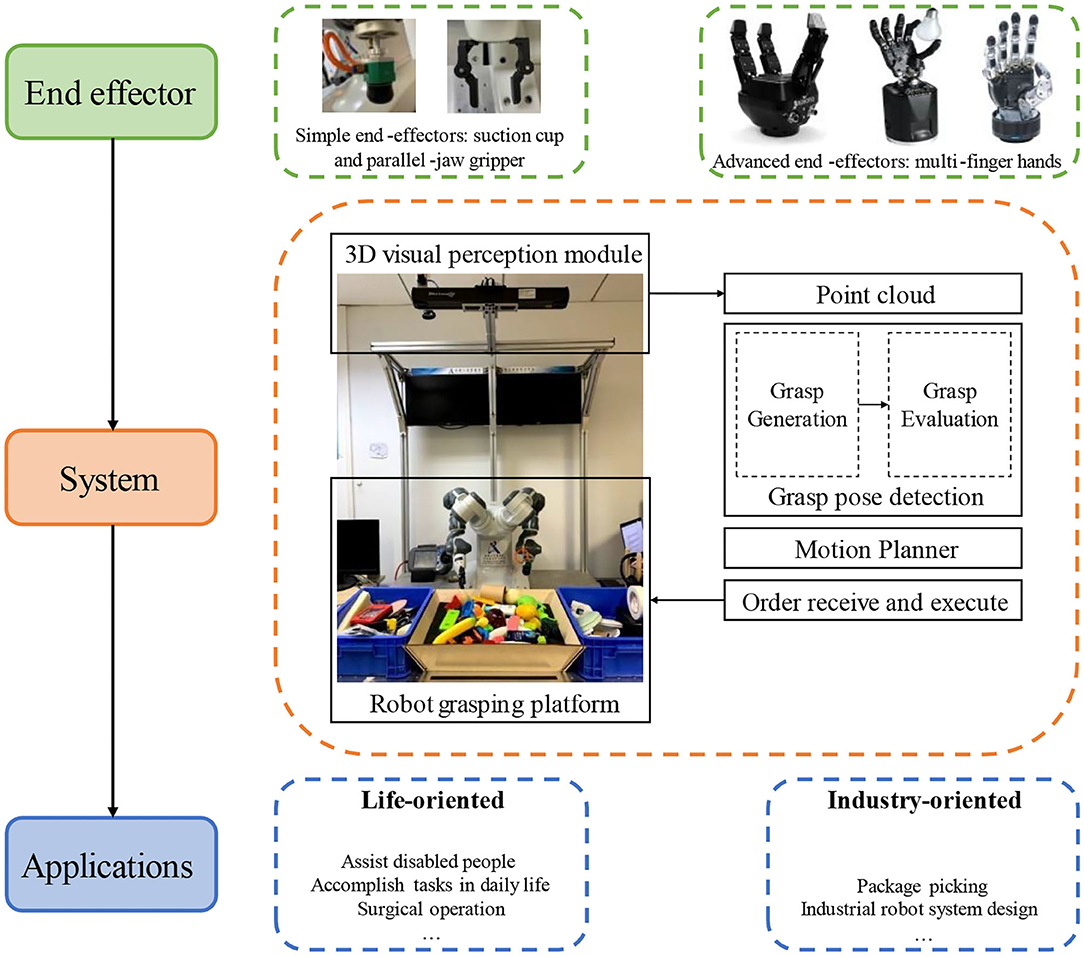

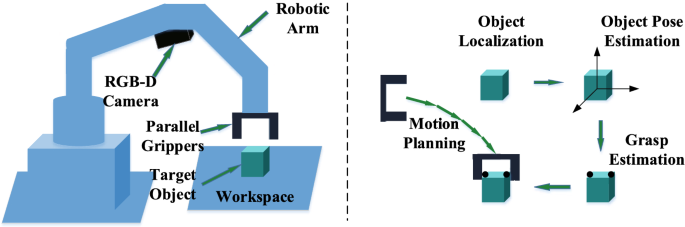

Recently, a number of grasp detection methods have been proposed that localize robotic grasp configurations directly from sensor data without estimating object pose. The underlying idea is to treat grasp perception analogously to object detection in computer vision. These methods take as input a noisy, partially occluded RGBD image or point cloud and output pose estimates of viable grasps, without assuming a known CAD model of the object. Although these methods generalize grasp knowledge to new objects well, they have not yet been shown to be reliable enough for widespread use. Many grasp detection methods achieve grasp success rates (grasp successes as a fraction of the total number of grasp attempts) between 75% and 95% for novel objects presented in isolation or in light clutter. Not only are these success rates too low for practical grasping applications, but the light-clutter scenarios typically evaluated often do not reflect the realities of real-world grasping. This paper proposes a number of innovations that together significantly improve grasp detection performance. The improvement contributed by each innovation is measured quantitatively, either in simulation or on robotic hardware. Ultimately, we report a series of robotic experiments that average a 93% end-to-end grasp success rate for novel objects presented in dense clutter.
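The grasp success rate defined above is a simple ratio of successful grasps to grasp attempts. The Python sketch below computes it and shows two plausible ways of aggregating it over several experiments. The paragraph does not state which aggregation underlies the reported 93% average, so both aggregation functions (and all names here) are illustrative assumptions, and the counts in the usage example are hypothetical.

```python
from typing import Sequence, Tuple


def grasp_success_rate(successes: int, attempts: int) -> float:
    """Successful grasps as a fraction of all grasp attempts."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    if not 0 <= successes <= attempts:
        raise ValueError("successes must lie in [0, attempts]")
    return successes / attempts


def pooled_success_rate(trials: Sequence[Tuple[int, int]]) -> float:
    """Assumed aggregation 1: total successes over total attempts across all runs.

    Each trial is a (successes, attempts) pair.
    """
    total_successes = sum(s for s, _ in trials)
    total_attempts = sum(a for _, a in trials)
    return grasp_success_rate(total_successes, total_attempts)


def mean_success_rate(trials: Sequence[Tuple[int, int]]) -> float:
    """Assumed aggregation 2: unweighted mean of the per-run success rates."""
    if not trials:
        raise ValueError("need at least one trial")
    rates = [grasp_success_rate(s, a) for s, a in trials]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    # Hypothetical counts for three runs, chosen only to show that the two
    # aggregations can differ when runs have different numbers of attempts.
    runs = [(10, 10), (5, 10), (0, 5)]
    print(pooled_success_rate(runs))  # 15 / 25 = 0.6
    print(mean_success_rate(runs))    # (1.0 + 0.5 + 0.0) / 3 = 0.5
```

When every run has the same number of attempts the two aggregations coincide; they diverge only when run sizes differ, which is why it matters which one a reported average uses.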

