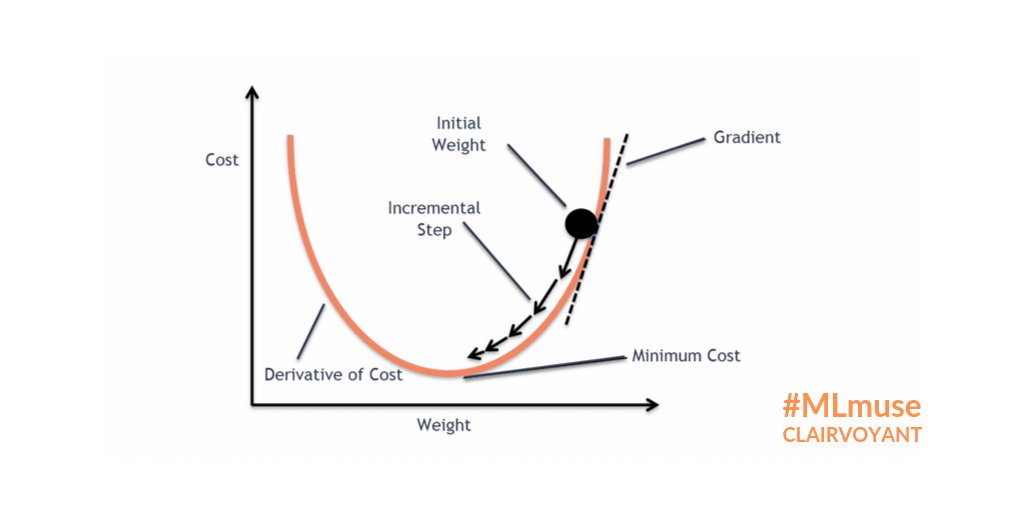

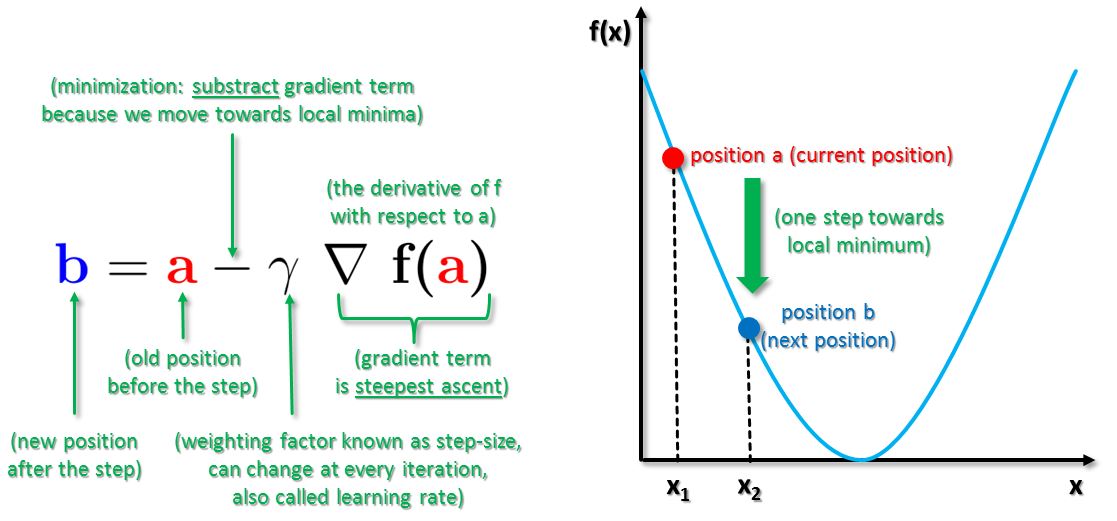

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, consequently reducing the value of the function.
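As a minimal sketch of the update rule described above, the loop below repeatedly steps opposite to the gradient, x ← x − α∇f(x). It assumes the gradient is available in closed form. The names `gradient_descent` and `grad_f`, the example function, the step size α = 0.1 and the stopping tolerance are all illustrative assumptions, not taken from the source. Like the method itself, it only guarantees a local minimum. On this convex example that local minimum is also the global one.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def gradient_descent(
    grad: Callable[[Vector], Vector],
    x0: Sequence[float],
    learning_rate: float = 0.1,
    tol: float = 1e-8,
    max_iter: int = 10_000,
) -> Vector:
    """Approximate a local minimizer of f, given a function returning its gradient.

    Each step moves opposite to the gradient: x <- x - learning_rate * grad(x).
    Stops when the gradient's Euclidean norm drops below `tol`
    or after `max_iter` steps, whichever comes first.
    """
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        # Near a stationary point the gradient vanishes, so stop there.
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        # Step in the direction of steepest decrease of f.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical example: f(x, y) = (x - 3)^2 + 2 * (y + 1)^2,
# whose only minimum is at (3, -1).
def grad_f(p: Vector) -> Vector:
    x, y = p
    return [2.0 * (x - 3.0), 4.0 * (y + 1.0)]


x_min = gradient_descent(grad_f, [0.0, 0.0])
print(x_min)  # close to [3.0, -1.0]
```

With α = 0.1 on this quadratic, each step shrinks the distance to the minimum by a constant factor (0.8 along x, 0.6 along y), so the loop stops after roughly a hundred iterations. A much larger step, such as α = 0.6, would make the y-coordinate overshoot with growing amplitude and diverge. This is why the choice of step size matters.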

By a mysterious writer

Last updated 11 April 2025

A gradient descent algorithm finds one of the local minima. How do we find the global minima using that algorithm? - Quora

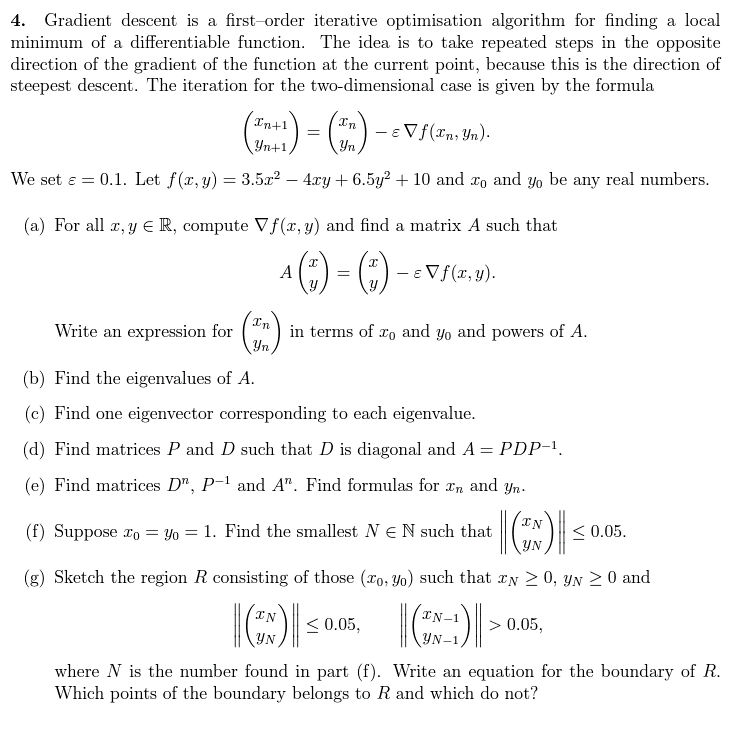

Solved 4. Gradient descent is a first-order iterative

How to implement a gradient descent in Python to find a local minimum ? - GeeksforGeeks

How to figure out which direction to go along the gradient in order to reach local minima in gradient descent algorithm - Quora

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

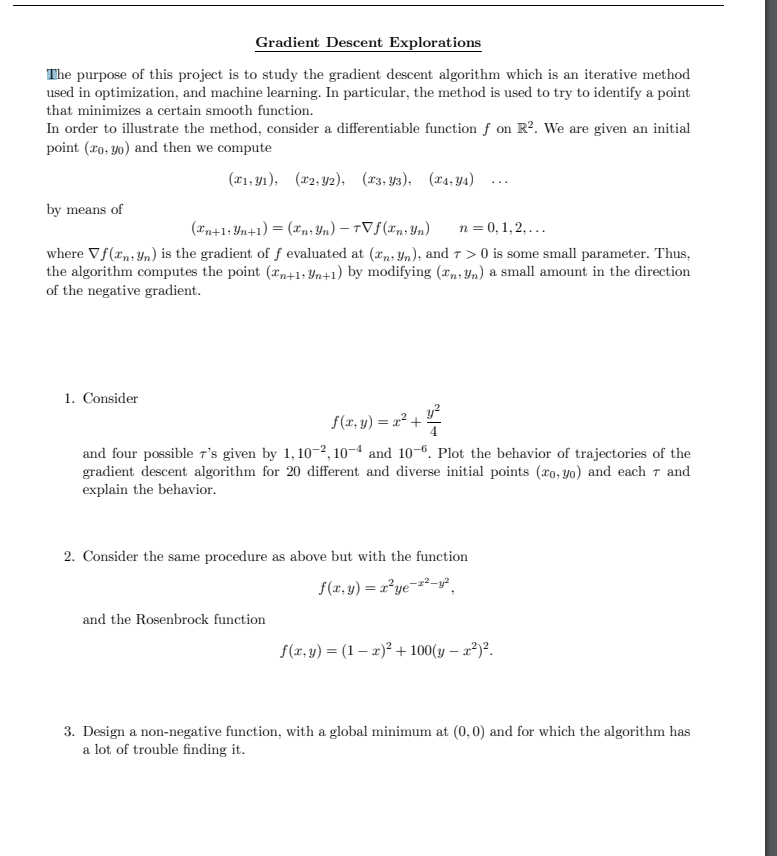

The purpose of this project is to study the gradient

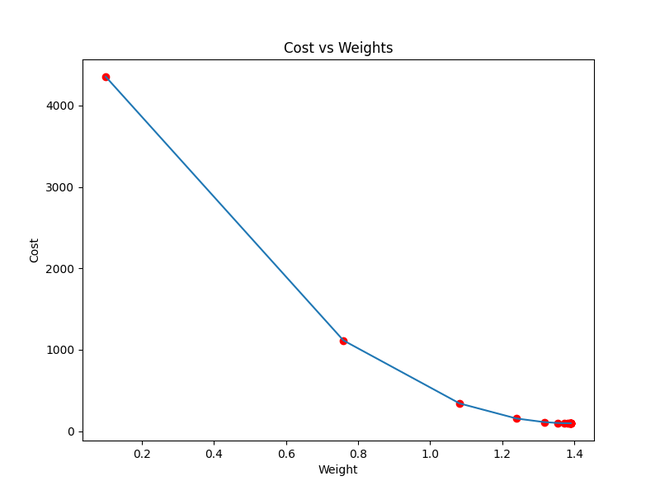

Gradient Descent algorithm. How to find the minimum of a function…, by Raghunath D

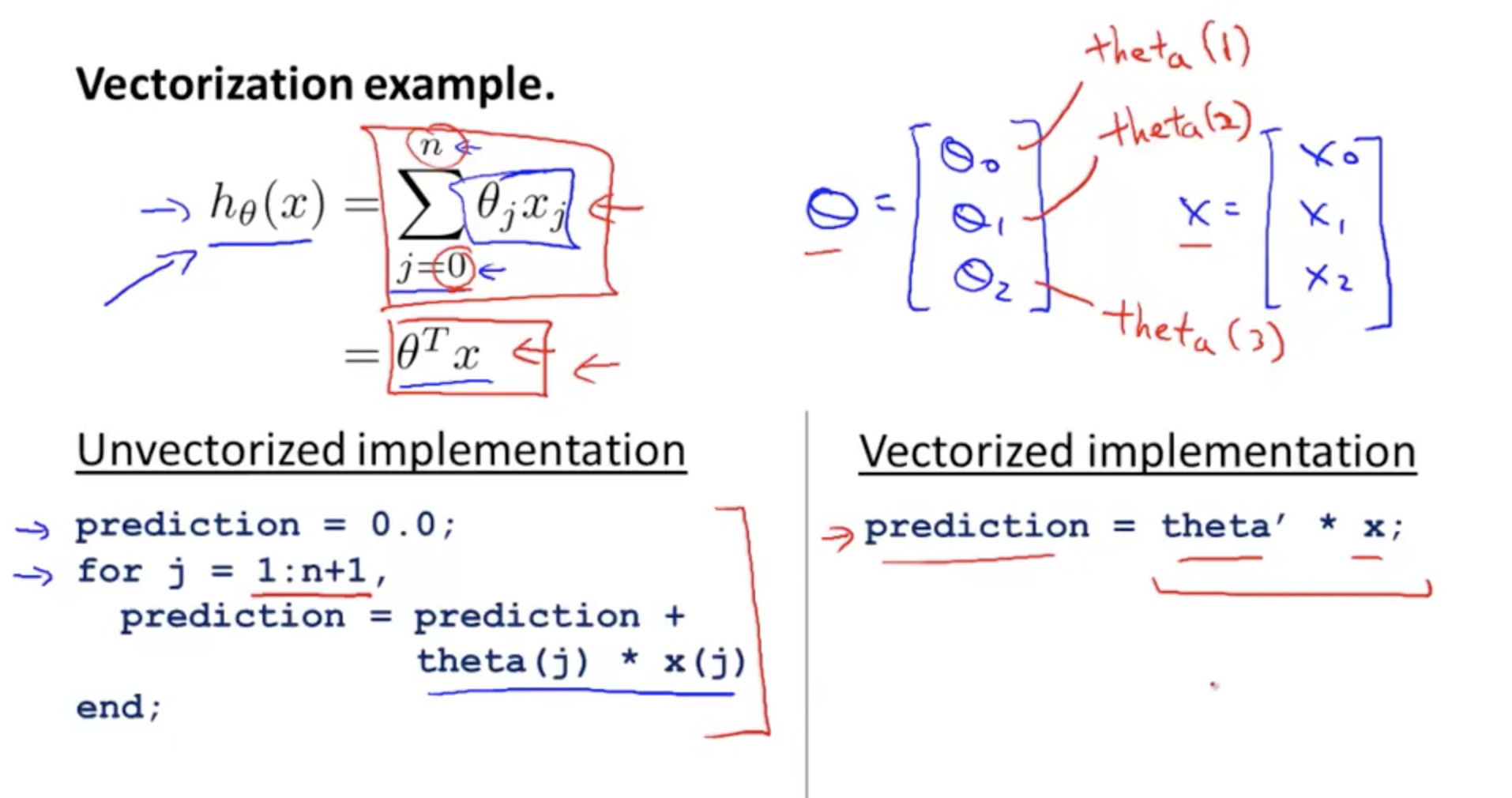

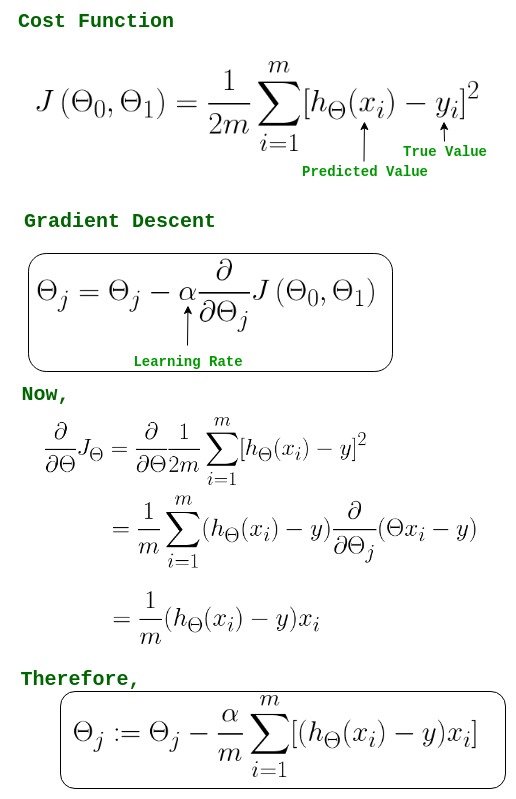

[L2] Linear Regression (Multivariate). Cost Function. Hypothesis. Gradient

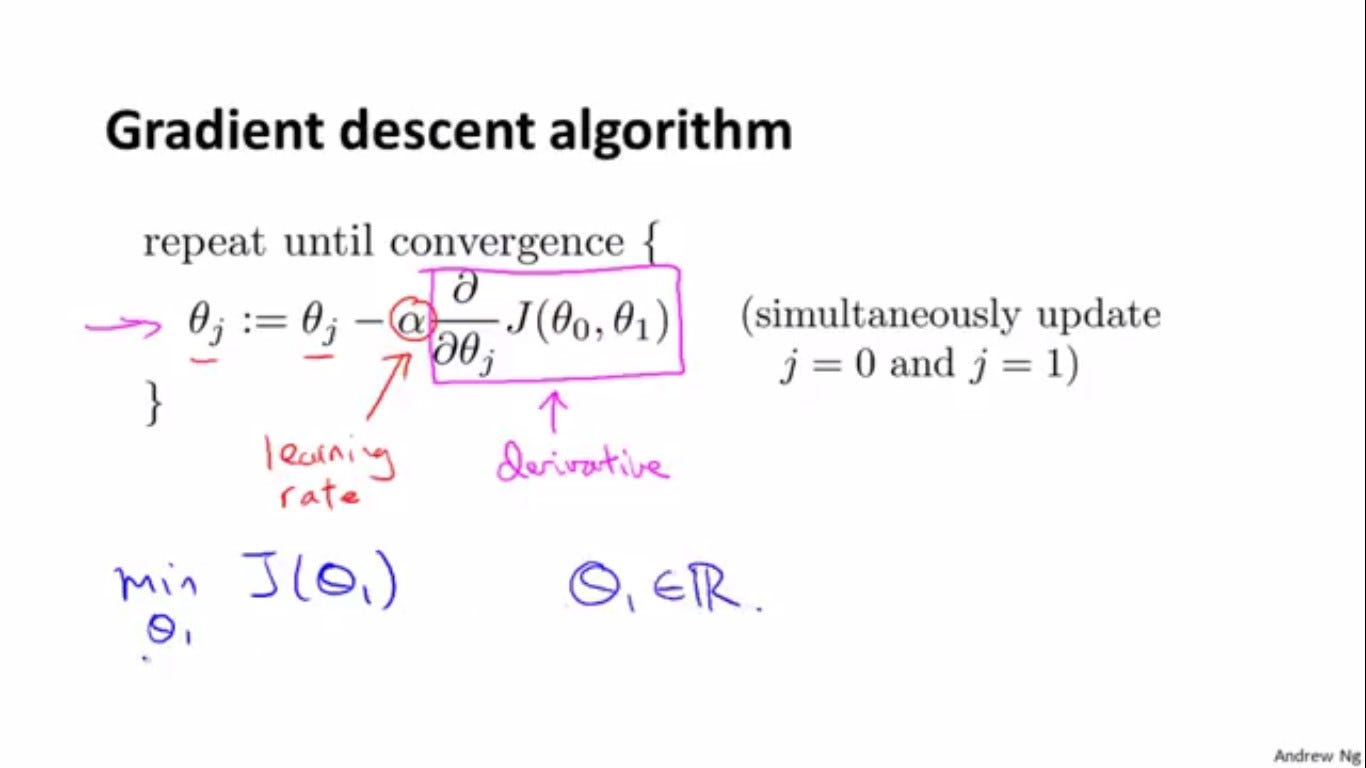

Gradient descent algorithm and its three types

Gradient Descent in Linear Regression - GeeksforGeeks

Recommended for you

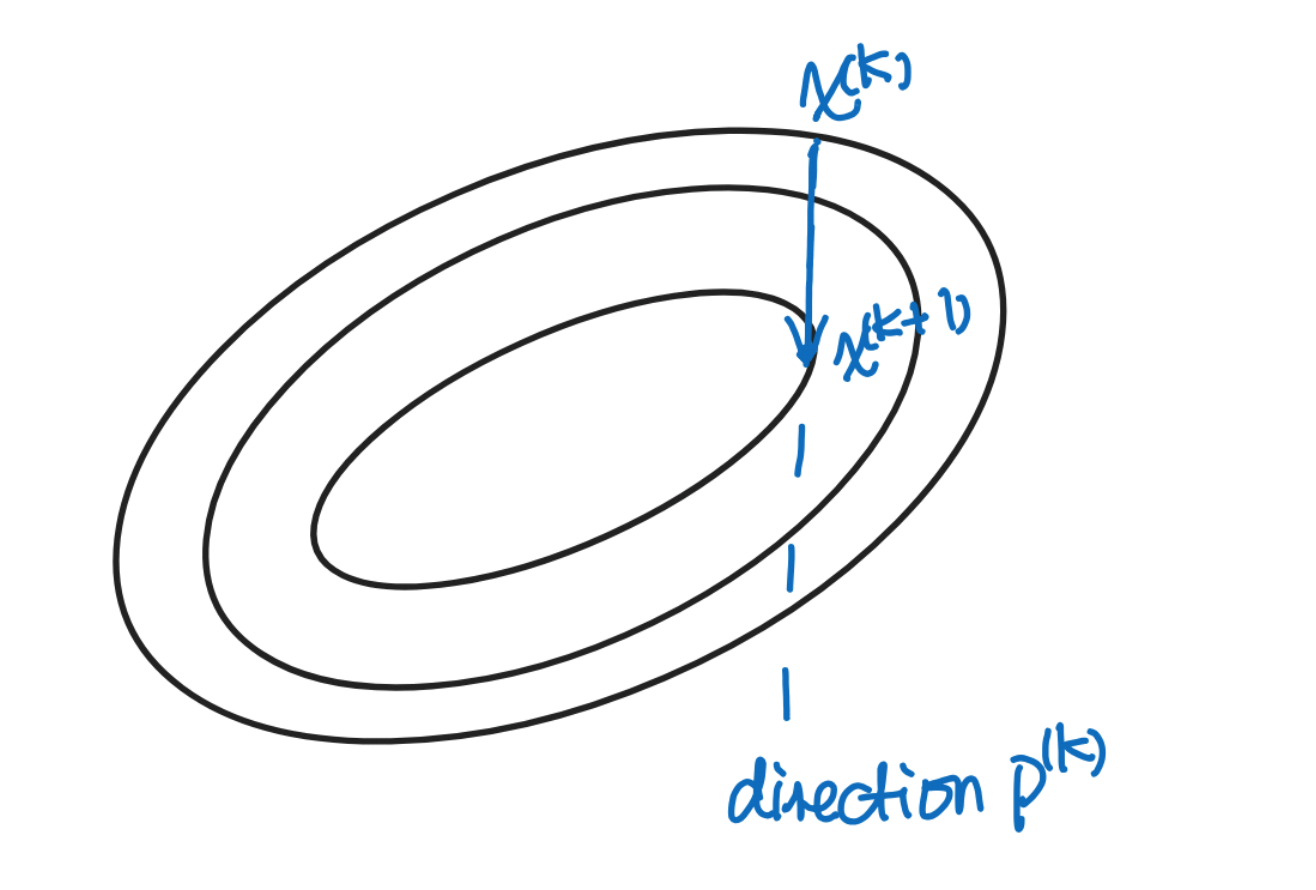

Introduction to Method of Steepest Descent

Descent method — Steepest descent and conjugate gradient, by Sophia Yang, Ph.D.

linear algebra - Preconditioned Steepest Descent - Computational Science Stack Exchange

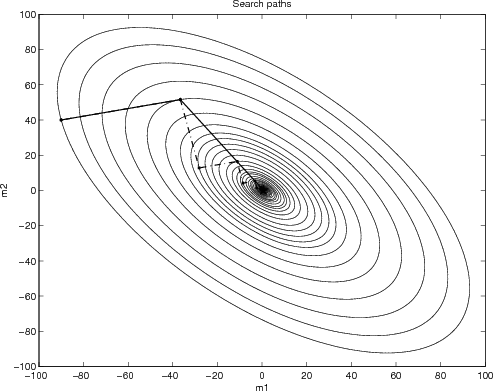

Illustration of the steepest descent method used to maximize the R

matrices - How is the preconditioned conjugate gradient algorithm related to the steepest descent method? - Mathematics Stack Exchange

Machine learning (Part 8). Understanding the Role of Alpha and…, by Coursesteach

Why steepest descent is so slow

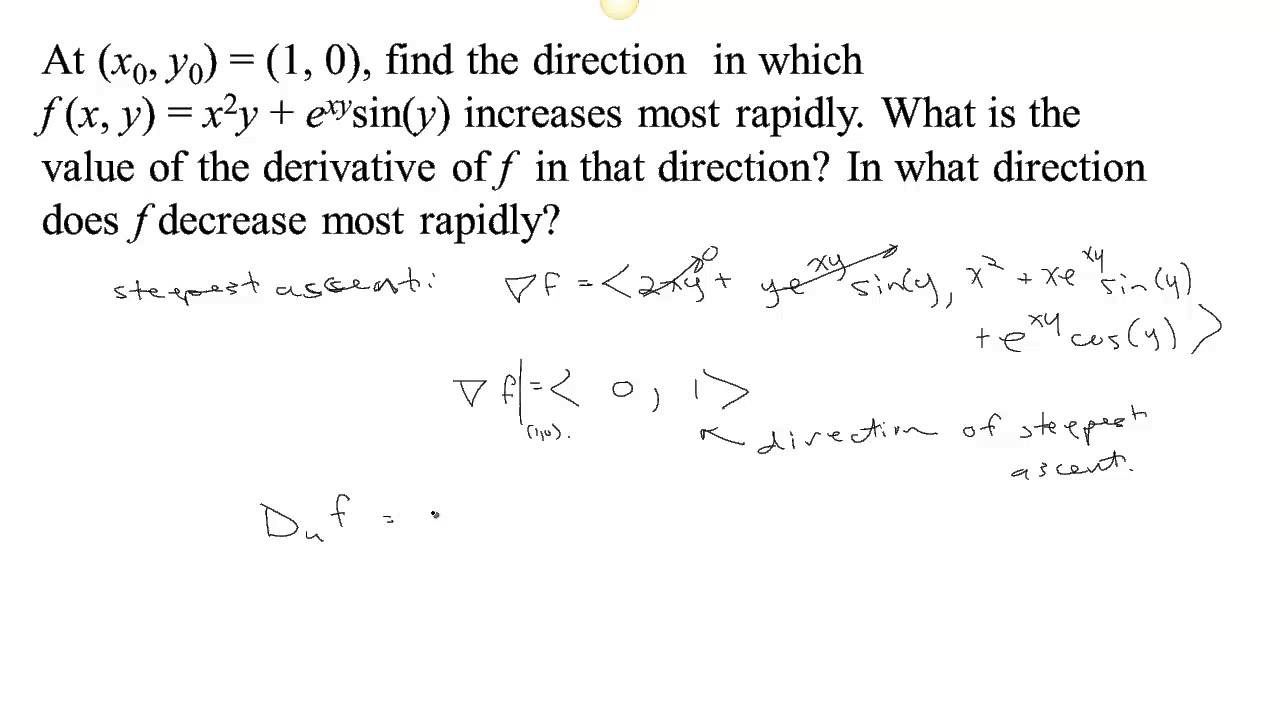

Steepest Ascent and Steepest Descent

Gradient Descent Big Data Mining & Machine Learning

Steepest Descent - an overview
